About

Hey there...

I'm Hari Janardhan Vanka, an experienced Data Analytics Analyst/Engineer/Consultant/Specialist at Discover Financial Services.

I have applied data engineering and analytics principles in the financial domain, specifically in fraud, automating ETL processes by building and maintaining real-time streaming data pipelines that ingest data from the data lake and other data sources.

> Optimized processing by creating functions to process and load data from PySpark data frames into Hive or Impala tables.

> Pre-processed data from ISA into HDFS file formats, applied transformation techniques to create Parquet files for data cleansing and normalization, and built JARs on top of them.

> Designed dashboards and data visualizations in Power BI, using DAX commands to communicate meaningful metrics.

> Maintained data extracted from the Splunk API, then cleansed it and loaded it into tables in the desired format.

> Experienced with the SAS interface, including SAS procedural SQL and SAS programming, with multiple SAS certifications.

> Well versed in credit risk management objectives, quality control analysis, credit modelling, and credit risk mitigation strategies.

> Experienced with Salesforce CRM Cloud administration and development. Ingested data from data sources using a combination of SQL and the Salesforce API with Python to create data views for use in Tableau.

Work Experience

Discover Financial Services - Fraud Specialist from March 2025

PNC Bank - Data Analytics Analyst from July 2024

Regions Bank - Data and Analytics Analyst from Oct 2023

3S Business Corporation Inc - Data Engineer from Sept 2023

Graduate Student Assistant - from Feb, 2022

Innova Solutions - Software Engineer from Mar, 2021

Trailhead Systems - Salesforce CRM Analytics Developer from Sept, 2020

TheSmartBridge - IOT Engineer from May, 2019

Education

Pursued a Master's in Computer & Information Sciences, with a focus on Data Science, at the University of Alabama at Birmingham. During the coursework, as an aspiring student, I built up my knowledge of Data Mining, Machine Learning, advanced web development, Big Data, cloud computing, advanced algorithms, Database Systems & App development, and Network Security concepts, and explored new technologies in the IT hub.

I enjoy learning about new advancements in technology. I have implemented live streaming projects on various clouds, explored Data Science and Machine Learning algorithms, and implemented AI concepts in my projects.

I have attended multiple workshops and internships at various colleges as well as at companies in the industry. I have acquired skills from various sources; for example, I started implementing projects using Kaggle data.

Skills

Programming Languages - Python, Java

Query Languages - MySQL, SQL, SOQL, SOSL

Databases - MySQL, MongoDB, SQL Server, PostgreSQL, MariaDB

Big Data Technologies - Apache Spark, Spark SQL, Apache Hive, Apache Impala, Apache Kafka, Apache Kudu, HDFS, SBT, Postman, MapRGit, Scala, Bitbucket, Git, GitLab, Harness, JFrog, Airflow, Splunk API, Alteryx.

Cloud Technologies - AWS - {S3, DynamoDB, RDS, EMR, Athena, Glue, Redshift}, Azure, Databricks, Snowflake, Cloudera, GCP.

Visualisation Tools – Tableau, Power BI, SAS BI, MicroStrategy, Excel

Web Development Languages - HTML, CSS, JavaScript, Django, Flask

CRM Salesforce Cloud - Administrator, Developer, SAP Business Objects.

Operating Systems - Windows – [XP, 7, 10, 11] and Linux

Methodology - Agile (Atlassian - Jira, Confluence, Kanban Board)

IDEs - Sublime Text, PyCharm, IntelliJ, Node.js, Anaconda, VS Code.

Computational Skills - MS Office

With hard work and grit, I have gained valuable skills and solved problems. I enjoy discovering solutions, whether that means finding the most elegant way to write a line of code or figuring out which chord fits best into a progression.

DS ML AI Full Stack

Latest Implementations

Stock Market Kafka Live Streaming Pipeline

Implemented a streaming pipeline using Apache Kafka to stream the full dataset into an AWS S3 bucket in real time, then used an AWS Glue crawler and Athena to query and manipulate the data.

Dataset -> Stock Market app simulation -> Producer -> Kafka -> Consumer -> S3 -> Crawler -> Glue -> Athena.
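The producer-to-S3 part of this flow can be sketched in a few lines. This is only a minimal illustration under stated assumptions, not the project's actual code: a `queue.Queue` stands in for the Kafka topic, a plain dictionary stands in for the S3 bucket, and the function names, key prefix, and sample rows are all hypothetical.

```python
import json
import queue


def produce(ticks, topic):
    """Serialize each tick to JSON bytes and publish it to the topic."""
    for tick in ticks:
        topic.put(json.dumps(tick).encode("utf-8"))


def consume(topic, bucket, prefix="stock_market"):
    """Drain the topic and store one JSON object per message in the bucket."""
    written = []
    count = 0
    while not topic.empty():
        payload = topic.get()
        key = f"{prefix}/tick_{count:06d}.json"  # hypothetical key layout
        bucket[key] = payload
        written.append(key)
        count += 1
    return written


# Hypothetical sample rows; a real run would read them from the dataset.
sample_ticks = [
    {"symbol": "AAA", "price": 101.5, "ts": "2024-01-02T09:30:00"},
    {"symbol": "BBB", "price": 55.25, "ts": "2024-01-02T09:30:01"},
]

topic = queue.Queue()  # stand-in for a Kafka topic
bucket = {}            # stand-in for an S3 bucket
produce(sample_ticks, topic)
keys = consume(topic, bucket)
```

In a real deployment the two functions would run as separate processes, with a Kafka client library and an S3 client replacing the stand-ins.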

---> GITHUB LINK <---

YouTube Data Analysis

Streamlined analysis of structured and semi-structured YouTube video data, from data ingestion through building an ETL pipeline to visualizing the data in QuickSight.

Dataset -> Data Ingestion (S3) -> Lambda -> Data Catalog -> Job -> Athena -> QuickSight.

---> Kaggle Link <---

---> GitHub Link <---

Credit Risk Analysis

Using a credit risk dataset, analysed whether a person's loan should be approved or rejected, classifying applications with a Random Forest classifier and a LightGBM classifier.

---> GitHub Link <---

DE on Twitter Data Using Airflow

Pulled data from the Twitter API using tweepy with Python, built and deployed the workflows in Airflow, and ingested the data through EC2 into S3.

Twitter API -> Python -> Apache Airflow -> EC2 -> AWS S3.
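The Python step in this flow usually boils down to flattening raw tweet records into a tabular file before upload. The sketch below is a minimal illustration under assumptions: the field names, the `refine` and `tweets_to_csv` helpers, and the sample tweets are hypothetical, and the API call and the S3 upload are left out so that the block runs on its own.

```python
import csv
import io

# Hypothetical subset of tweet fields to keep.
FIELDS = ["user", "text", "favorite_count", "retweet_count", "created_at"]


def refine(tweet):
    """Keep only the fields of interest from one raw tweet record."""
    return {field: tweet.get(field) for field in FIELDS}


def tweets_to_csv(tweets):
    """Render refined tweets as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, lineterminator="\n")
    writer.writeheader()
    for tweet in tweets:
        writer.writerow(refine(tweet))
    return buffer.getvalue()


# Hypothetical sample records standing in for the API response.
sample_tweets = [
    {"user": "alice", "text": "Hello Airflow", "favorite_count": 3,
     "retweet_count": 1, "created_at": "2023-01-01T10:00:00", "lang": "en"},
    {"user": "bob", "text": "Data pipelines", "favorite_count": 0,
     "retweet_count": 2, "created_at": "2023-01-01T11:00:00", "lang": "en"},
]

csv_text = tweets_to_csv(sample_tweets)
```

In an Airflow DAG, a function like `tweets_to_csv` would typically be wrapped in a Python task, with a later step writing the result to S3.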

---> GitHub Link <---

Flood Annotation

Annotated satellite images to train machine learning models for flood extent mapping, labelling as many flooded and dry land areas as possible. The annotations are saved per pixel in JSON format.

While annotating, we used segmentation-based topological data science and elevation-guided BFS concepts.
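As a rough illustration of the BFS idea, the sketch below spreads a flood label from a seed pixel to neighbouring pixels that are not higher than the pixel the water is coming from. This is an assumption about what "elevation-guided" means here, not the annotation tool's actual algorithm; the function name and the toy grid are hypothetical.

```python
from collections import deque


def elevation_guided_bfs(elevation, seed):
    """Return the set of pixels reachable from seed without moving uphill."""
    rows, cols = len(elevation), len(elevation[0])
    flooded = {seed}
    frontier = deque([seed])
    while frontier:
        r, c = frontier.popleft()
        # Visit the four direct neighbours of the current pixel.
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and (nr, nc) not in flooded:
                # Water only spreads to pixels at the same or lower elevation.
                if elevation[nr][nc] <= elevation[r][c]:
                    flooded.add((nr, nc))
                    frontier.append((nr, nc))
    return flooded


# Toy elevation grid (hypothetical values); the seed is the top-left pixel.
grid = [
    [5, 4, 3],
    [6, 9, 2],
    [7, 8, 1],
]
flooded = elevation_guided_bfs(grid, (0, 0))
```

On this grid the flood follows the descending path along the top row and down the right column, and never climbs onto the higher cells.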

resources - ---> Login Link <---

---> GitHub Link <---

Uber Trajectory Annotation

Uber trip data for Birmingham was collected from Uber drivers' historical trips on their smartphones.

Annotation was done using Georeferencer.com to recover the trip information from screenshot images of those trips, for use in later data analysis.

Resources - Georeferencer.com, any image-to-text converter

---> GitHub Link <---

DonorsChoose.org Application

DonorsChoose.org receives thousands of proposals each year for classroom projects in need of funding. The goal is to automate the screening process, produce results quickly, and improve the experience of its customers (teachers) during project vetting.

Algorithms chosen - Naive Bayes, Logistic Regression, XGBoost

---> Kaggle Link <---

---> GitHub Link <---

Full Stack App - Foodie

Developed a web application called Foodie where users can order food online. Features include food ordering, sign in/up, ratings and reviews, payment methods, order history, and favorite foods.

Technologies used – Python-Django, PostgreSQL, HTML, CSS, Bootstrap, jQuery, JavaScript.

---> GitHub Link <---

Tableau Implementations

Tableau can connect to almost any database to create and present insights.

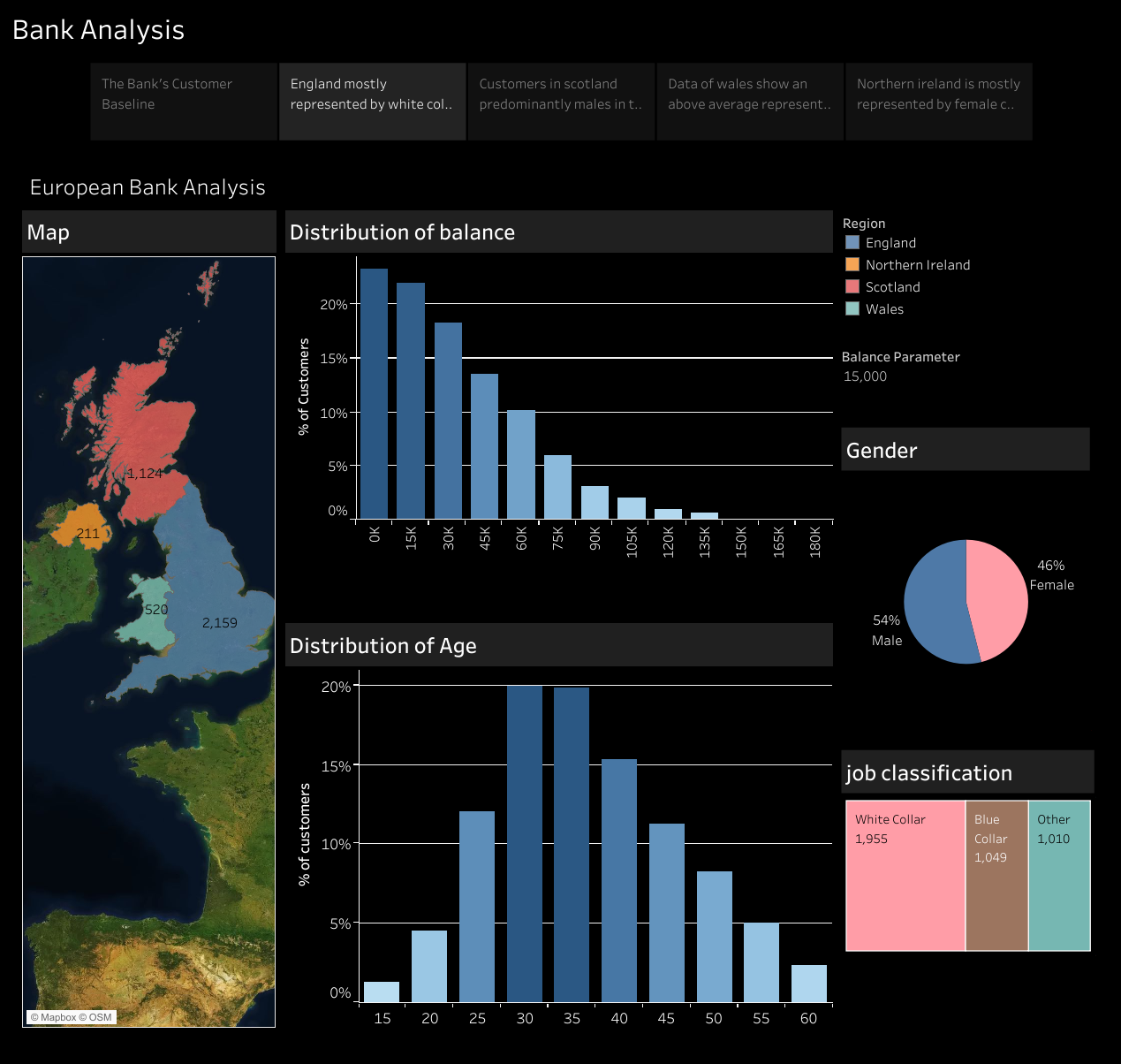

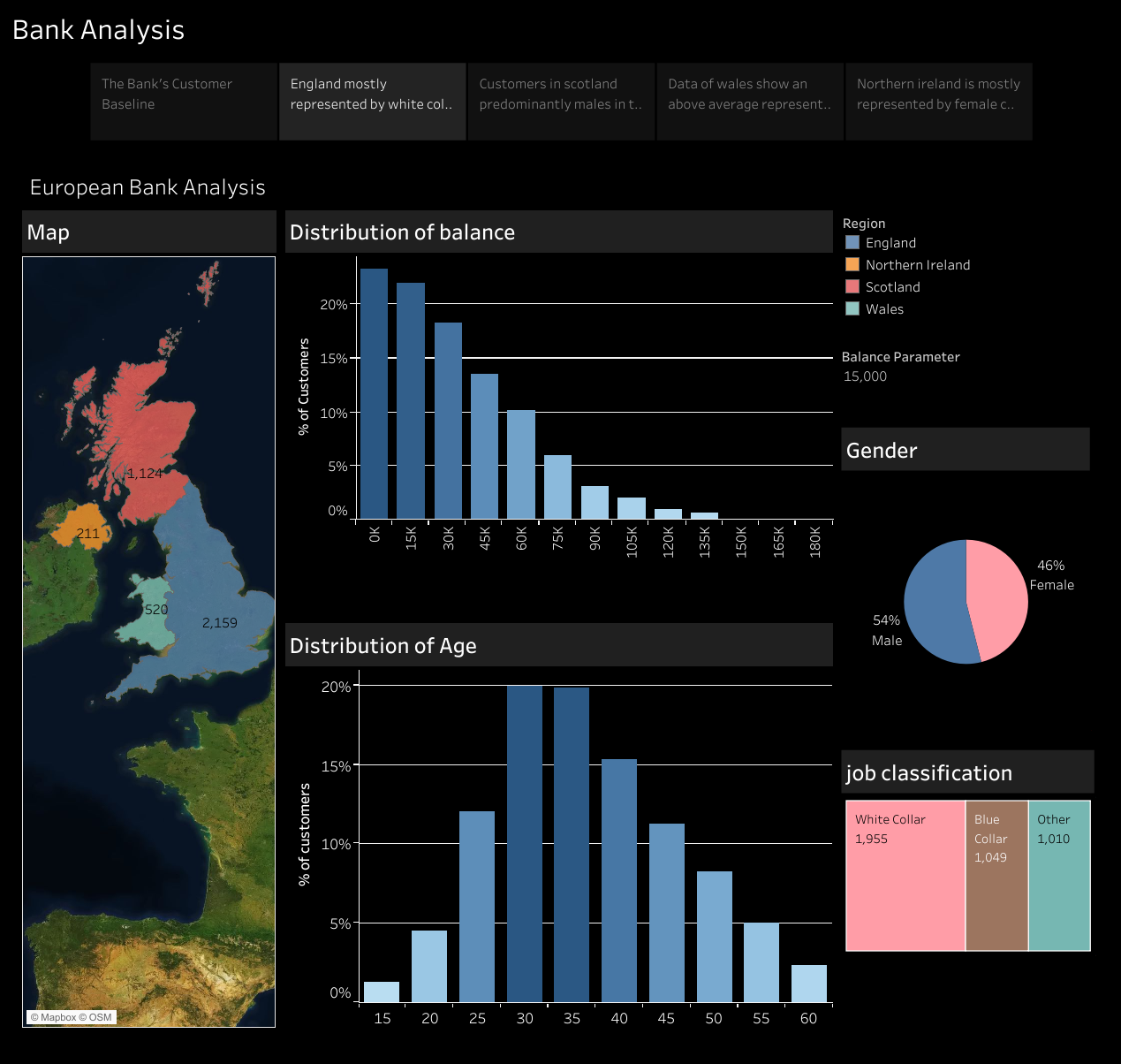

1. Bank Analysis -

• The data describes an imaginary bank operating in the UK. The analysis shows how the different regions are represented in the customer base, that is, what the customers look like in each region, so the bank can assess who is interested in it in different areas of the UK and how to better serve those customers.

• The process includes table calculations, dashboards, and storytelling.

• Dataset - dummy data for an imaginary bank operating in the UK.

• Dataset consists of [Customer Id, Name, Surname, Gender, Age, Region, Job Classification, Date Joined, Balance].
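The regional breakdown itself was built in Tableau, but the underlying calculation is simple enough to sketch in code. The example below is only an illustration under assumptions: it uses the `Region` column named above, while the `region_share` helper and the sample rows are hypothetical.

```python
from collections import Counter


def region_share(customers):
    """Return each region's share of the customer base as a fraction."""
    counts = Counter(row["Region"] for row in customers)
    total = sum(counts.values())
    return {region: count / total for region, count in counts.items()}


# Hypothetical sample rows using a few of the dataset's columns.
customers = [
    {"Customer Id": 1, "Region": "England", "Balance": 1200.0},
    {"Customer Id": 2, "Region": "England", "Balance": 300.0},
    {"Customer Id": 3, "Region": "Scotland", "Balance": 950.0},
    {"Customer Id": 4, "Region": "Wales", "Balance": 80.0},
]

shares = region_share(customers)
```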

--- You can click on the visual below to be redirected to Tableau Public ---

--- You can access the Tableau file and documentation from the links given below ---


https://public.tableau.com/profile/vanka.hari.janardhan#!/

https://github.com/V-H-J/Tableau-Implementations/tree/master/Bank%20Analysis

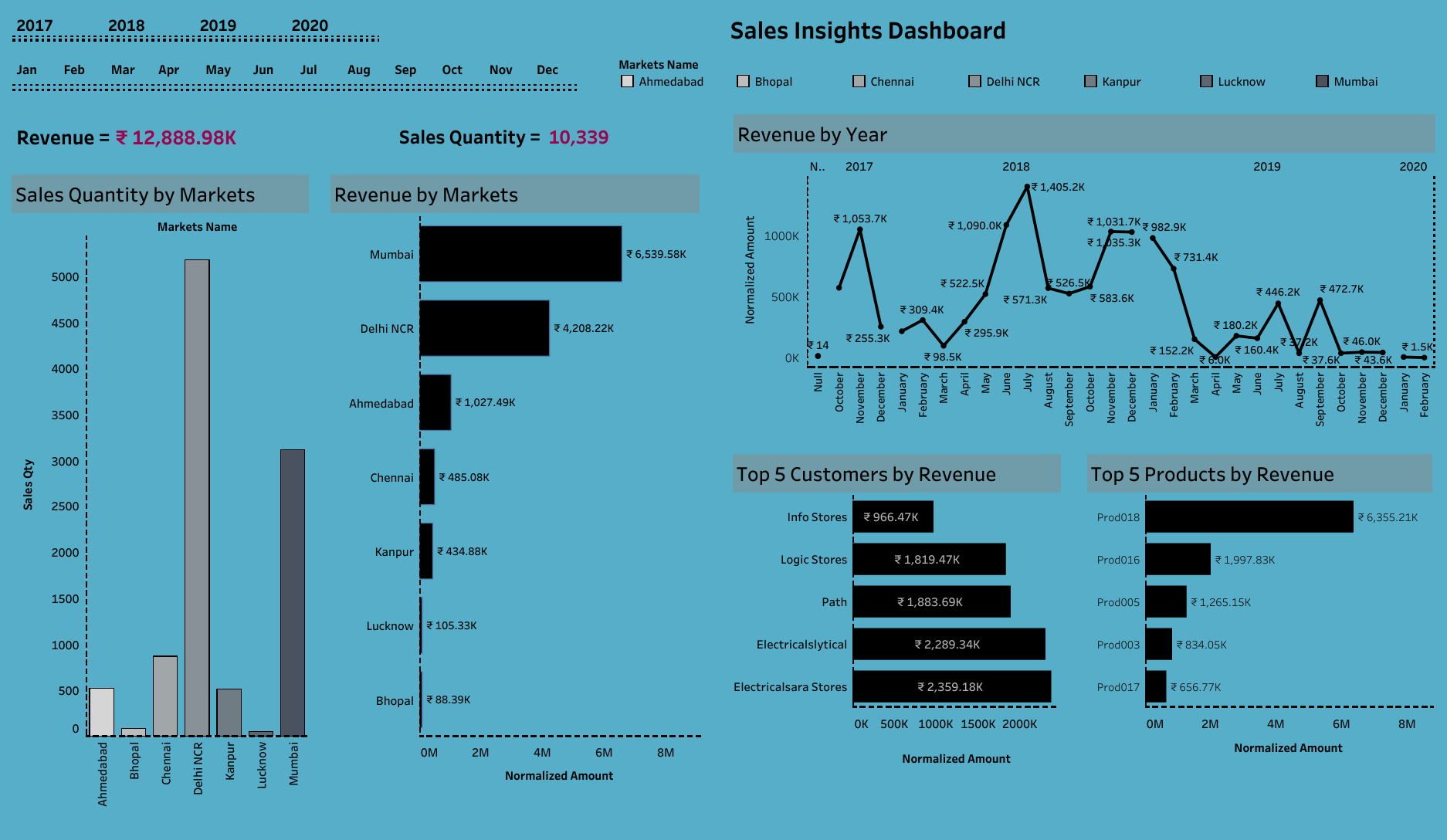

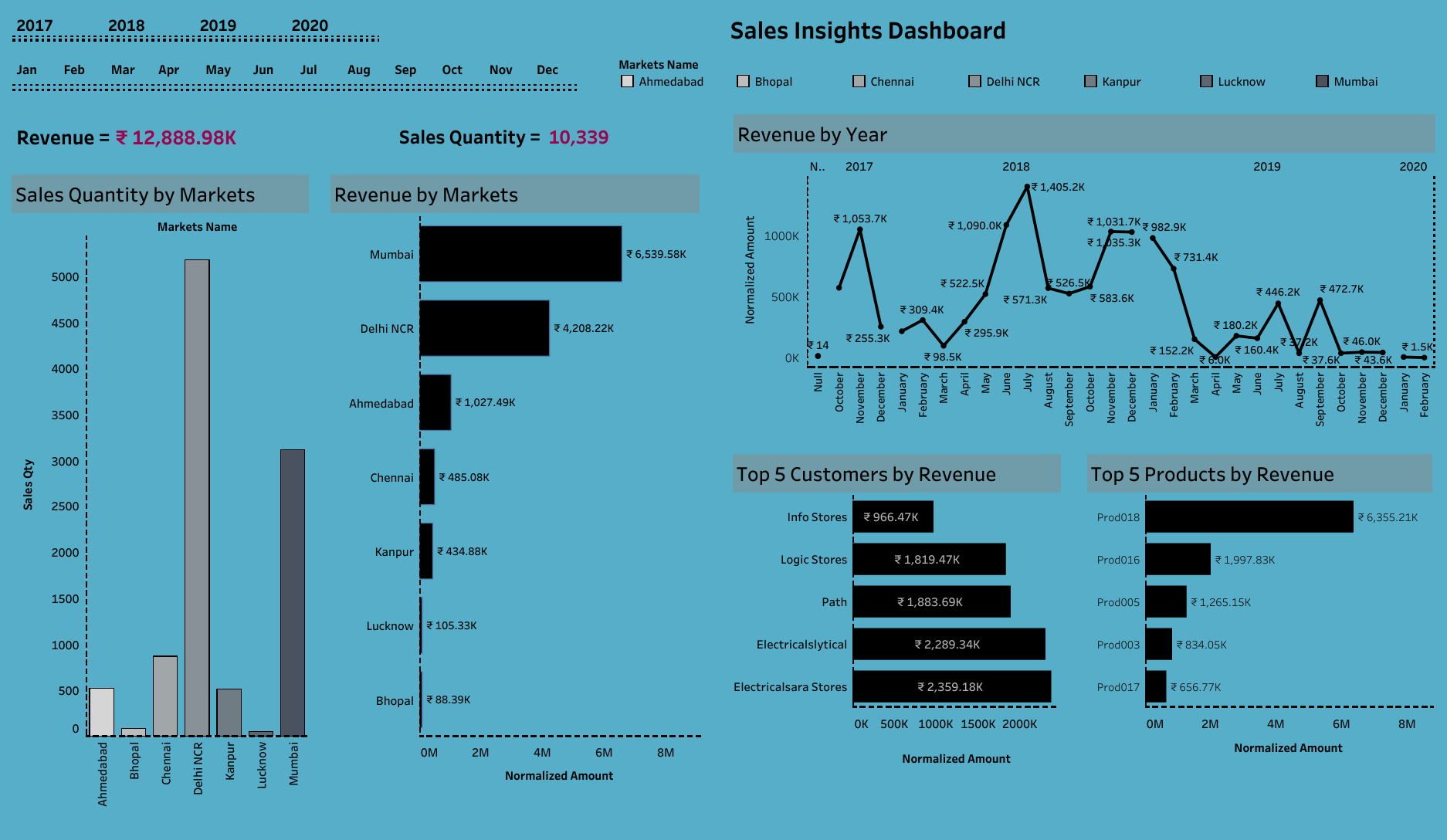

2. SALES Insights -

Problem Statement -

• Atliq Hardware is a company that supplies hardware peripherals to different clients such as nomad stores, electrical stores, and wholesale stores. A Tableau or data analyst can surface sales insights for all regional branches, their clients, and their performance, so the company can make better decisions.

--- You can click on the visual below to be redirected to Tableau Public ---

--- You can access the Tableau file and documentation from the links given below ---


https://public.tableau.com/profile/vanka.hari.janardhan#!/

https://github.com/V-H-J/Tableau-Implementations/tree/master/Sales_Insights

Heart Disease Classification

Still working on it for better accuracy...

1. Problem definition, in a statement –

• Given clinical parameters about a patient, can we predict whether or not they have heart disease?

• Dataset from the UCI Machine Learning Repository...

• This Dataset is available on Kaggle.

- URL - https://www.kaggle.com/ronitf/heart-disease-uci

You can access the code from the link below.

--- https://github.com/V-H-J/Heart-Disease-Classification

ML Supervised Algorithms Implementations

Regression Algorithms --

Classification Algorithms --

Major project

DATA SCIENCE PROCESS PIPELINE TO SOLVE EMPLOYEE ATTRITION AND THEIR JOB PERFORMANCE AND PREDICTING WITH AI

Objective -

• Retaining valuable employees has become an important issue for organizations: it is hard to find out why employees leave, and keeping them satisfied is a big challenge. This report predicts employee retention within an organization using Python and data science methods.

• The main idea of this report is to identify which valuable employees are likely to leave the company and the features that influence that decision, such as salary level, number of hours worked per week, and promotions. The application was developed in Python with the help of data science and machine learning models.

---> Click the links below to view the project code and documentation ---

https://github.com/V-H-J/Data-Science-Process-Pipeline-to-solve-employee-Attributes-and-Predicting-With-AI

Certifications

AWS Certified : Data Engineer Associate : DEA-C01 Certification

verify >>> Credly

--> Click to verify through the AWS Certified Data Engineer Associate credentials system portal / LinkedIn

Microsoft Certified : Azure Data Engineer Associate : DP-203 Certification

verify >>> Microsoft Portal

--> Click to verify through the Microsoft Certified Azure Data Engineer Associate credentials system portal / LinkedIn

Microsoft Certified: Power BI Data Analyst Associate

verify >>> Microsoft Portal

--> Click to verify through the Microsoft Certified: Power BI Data Analyst Associate credentials system portal / LinkedIn

Tableau Desktop Specialist

verify >>> Credly

--> Click to verify through the Tableau Desktop Specialist credentials system portal / LinkedIn

Alteryx Designer Core Micro-Credential: Data Transformation

verify >>> Credly

--> Click to verify through the Alteryx Designer Core Micro-Credential: Data Transformation credentials system portal / LinkedIn

Databricks Certifications

- Generative AI Fundamentals

verify >>> Databricks Credentials Portal

--> Click to verify through the Databricks credentials system portal / LinkedIn

- Lakehouse Fundamentals

verify >>> Databricks Credentials Portal

--> Click to verify through the Databricks credentials system portal / LinkedIn

White Belt Certification

by Lean Six Sigma.

verify > LinkedIn

--> Click to verify through the credentials in the Certificate section

Oracle 2023 Associate Certifications

- Cloud Data Management

verify >>> Oracle CertiView

--> Click to verify through Oracle CertiView / LinkedIn

- Cloud Infrastructure

verify >>> Oracle CertiView

--> Click to verify through Oracle CertiView / LinkedIn

ML Model Monitoring Certification

by Arize.AI (Arize University)

verify > LinkedIn

--> Click to verify through the credentials in the Certificate section

IBM - Cognitive.AI Certifications

Data Analysis for Python >> Click to verify/view on Credly

Spark Fundamentals 1 >> Credly

Hadoop Fundamentals 101 >> Credly

Big Data 101 >> Credly

--> Verify license/credentials through LinkedIn

SAS Certifications

SAS SQL 1: Essentials >> Verify through Credly

SAS Programming 1: Essentials >> Credly

--> Verify license/credentials through LinkedIn

Microsoft Technology Associate

Introduction to Programming Using Python

Verify through Credly!

Drive Link

Machine Learning Certification

Machine Learning certification from Stanford University under Andrew Ng through Coursera.

- verify through Coursera!

IBM CognitiveClass.io Python Certification

Python for data science.

Verify through Credly!


Mini Project In (The Smart Bridge Pvt. Ltd)

Internet of Things (IoT)

- Drive Link!

NGO - Social Service - Commitment - Integrity Pledge Certificates

Started from School time....

- Drive Link!

Some Other Certificates

- Drive Link!

Salesforce

Salesforce Certified Platform Developer (PD1) - (DEV 401).

- https://drive.google.com/file/d/1XYV0H92oHVX2Quteif2H2IcPi-hYdvTh/view?usp=sharing

TrailBlazer Account

You can check my Trailblazer profile for the Salesforce Certified Platform Developer (PD1) - (DEV 401) certification.

- https://trailblazer.me/id/vhj9

• Completed various tasks and built apps such as a data model for a Recruitment App, a Suggestion Box App, and a Travel Approval App.

• Implemented other projects such as Airport Management.

Contact
